AnySat: An Earth Observation Model for Any Resolutions, Scales, and Modalities

CVPR 2025 (Highlight)

Introducing AnySat

AnySat is a multimodal model based on a joint embedding predictive architecture (JEPA) and resolution-adaptive spatial encoders. Trained in a self-supervised manner, it handles:

📏 Multiple Scales

From local to global observations

🔍 Various Resolutions

Spatial, spectral, and temporal

🛰️ Different Modalities

Multiple sensor combinations

Key Innovations

Shared Architecture

75% of parameters are shared across all modalities and scales, enabling efficient multimodal learning

Scale-Adaptive Design

Modified JEPA learning scheme with scale-adaptive spatial encoders for multi-resolution processing

Universal Compatibility

Handles data from 0.2 m to 500 m resolution, 3 to 12 channels, and areas from 0.3 to 2600 hectares
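
To relate resolution and image size to the area figures quoted above, the ground footprint of a tile can be computed from its pixel dimensions and ground sampling distance. The helper below is a minimal sketch for illustration only; `footprint_hectares` is a hypothetical name, not part of AnySat, and the tile sizes in the example are made up.

```python
def footprint_hectares(height_px, width_px, resolution_m):
    """Ground area covered by an image, in hectares (1 ha = 10,000 m^2).

    Hypothetical helper for illustration; not part of the AnySat API.
    """
    area_m2 = (height_px * resolution_m) * (width_px * resolution_m)
    return area_m2 / 10_000


# A 500 x 500 px tile at 0.2 m covers 100 m per side.
print(footprint_hectares(500, 500, 0.2))
# A 10 x 10 px tile at 500 m covers 5 km per side.
print(footprint_hectares(10, 10, 500))
```

The two illustrative tiles come out at roughly 1 and 2500 hectares, both within the range quoted above.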

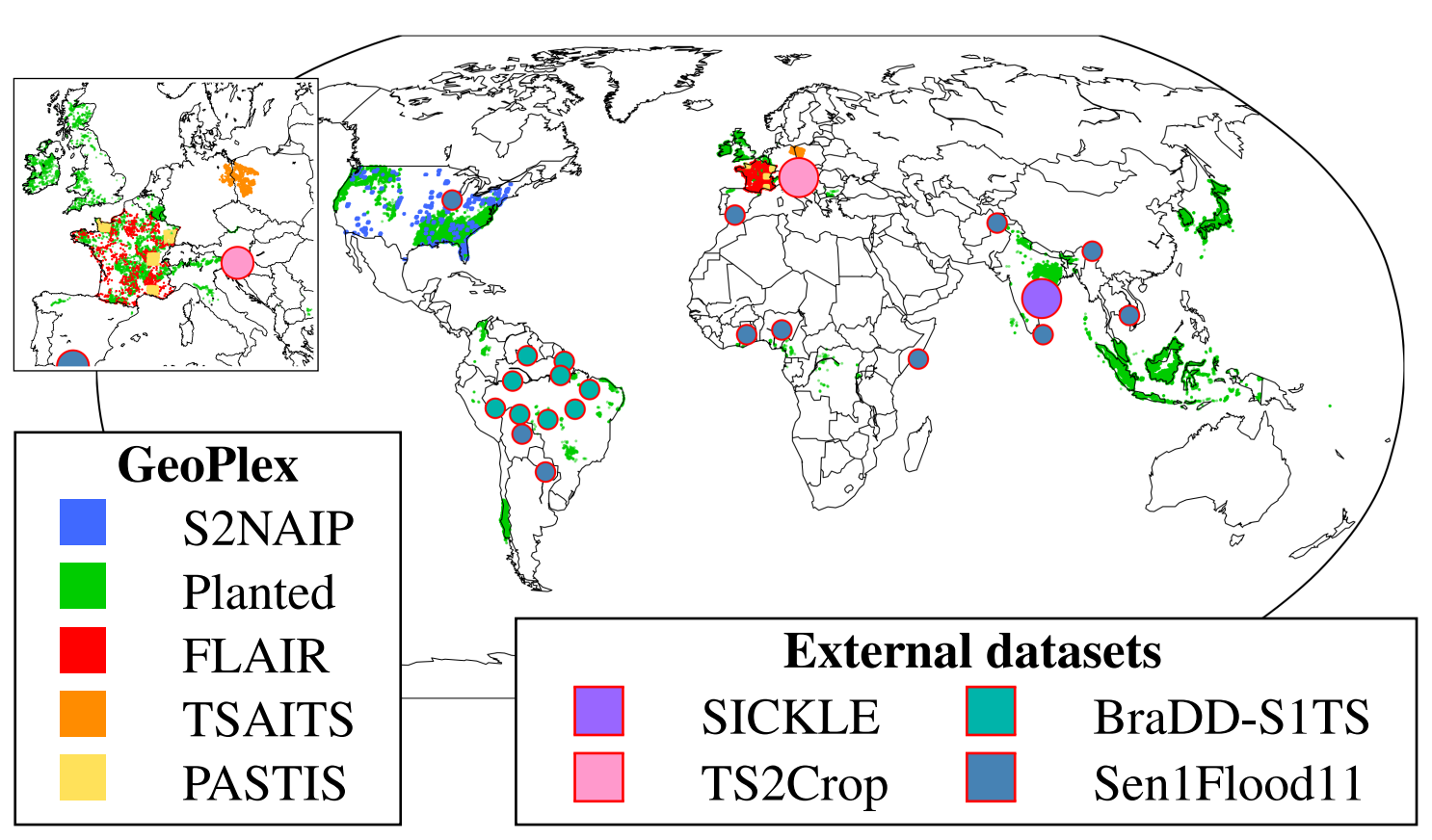

Datasets

We compile GeoPlex, a collection of multimodal datasets with varying characteristics, to train a single powerful model on these diverse datasets simultaneously. We favor quality and diversity over quantity.

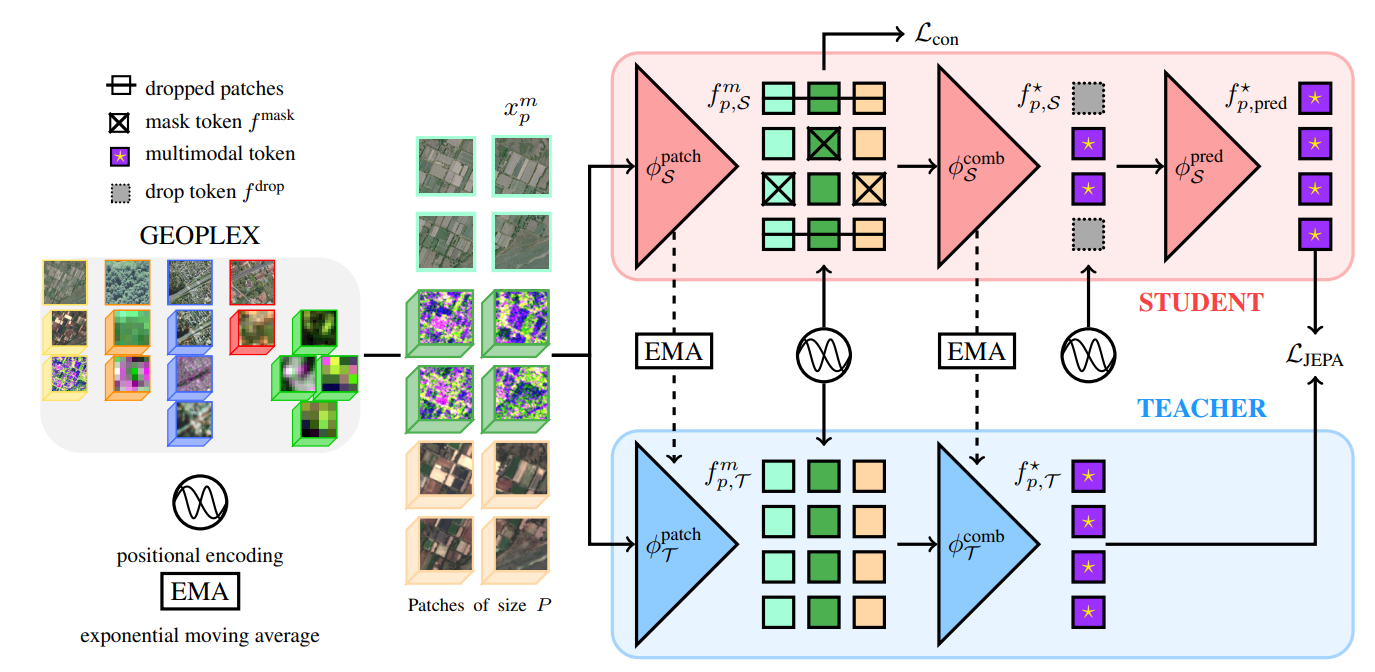

Architecture

Scale-Adaptive JEPA Design

AnySat employs a novel Joint Embedding Predictive Architecture (JEPA) that adapts to multiple spatial scales and resolutions. Key components include:

- Resolution-adaptive spatial encoders

- Multi-modal fusion mechanism

- Scale-aware prediction heads

- Self-supervised learning framework
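
For readers new to JEPA, two bookkeeping steps are common in JEPA-style training as described for models such as I-JEPA: patch tokens are split into a visible context set and a masked target set, and a target encoder is updated as an exponential moving average (EMA) of the online encoder. The sketch below illustrates only these two generic steps in plain Python. It is not AnySat's implementation; the function names, the target ratio, and the momentum value are all assumptions chosen for the example.

```python
import random


def split_context_target(num_patches, target_ratio=0.5, seed=0):
    """Randomly split patch indices into visible context and masked targets.

    Hypothetical helper: real models may use structured (block) masking.
    """
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_targets = max(1, int(num_patches * target_ratio))
    targets = sorted(indices[:num_targets])
    context = sorted(indices[num_targets:])
    return context, targets


def ema_update(target_weights, online_weights, momentum=0.99):
    """Move each target-encoder weight toward the online encoder by EMA."""
    return [
        momentum * t + (1.0 - momentum) * o
        for t, o in zip(target_weights, online_weights)
    ]


context, targets = split_context_target(num_patches=16, target_ratio=0.25)
print(len(context), len(targets))  # 12 4
print(ema_update([0.0, 1.0], [1.0, 1.0], momentum=0.9))
```

In a full training loop, a predictor would estimate the target-encoder embeddings of the masked patches from the context embeddings, and the loss would compare the two in embedding space.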

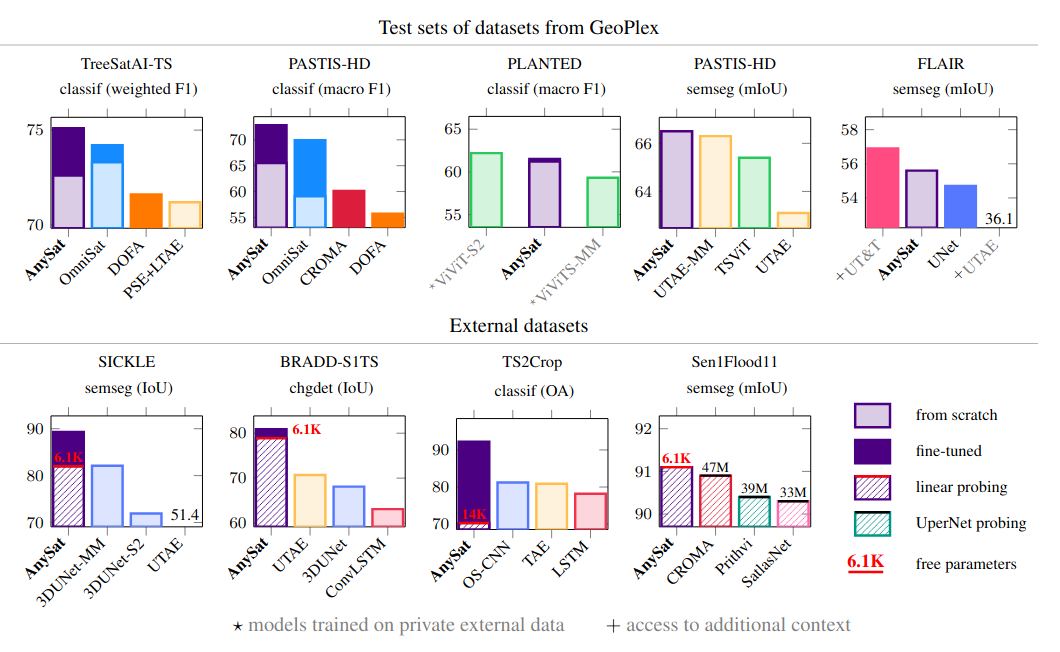

Results

Quick Start

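    import torch

    # Load the model (torch.hub fetches the code from the repository)
    anysat_B = torch.hub.load('gastruc/anysat', 'anysat_B')

    # Prepare your data: a dict mapping each modality name to a tensor.
    # B, T1, T2, H, W are placeholder sizes chosen only for illustration.
    B, T1, T2, H, W = 2, 4, 4, 4, 4
    data = {
        'naip': torch.randn(B, 3, 24 * H, 24 * W),   # NAIP single image: B, 3, 24*H, 24*W
        's2': torch.randn(B, T1, 10, 3 * H, 3 * W),  # Sentinel-2 time series: B, T1, 10, 3*H, 3*W
        'alos': torch.randn(B, T2, 3, H, W),         # ALOS-2 time series: B, T2, 3, H, W
    }

    # Extract features with the loaded model
    features = anysat_B(data, patch_size=20)  # patch_size in meters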
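
Because `patch_size` is given in meters rather than pixels, the same value corresponds to a different number of pixels for each sensor. The sketch below works through that arithmetic for a square tile. `patch_grid` is a hypothetical helper, not an AnySat function, and the 10 m Sentinel-2 grid is an assumption of the example. It covers only the simple case where a patch spans a whole number of pixels and the patches tile the image exactly.

```python
from fractions import Fraction


def patch_grid(side_px, resolution_m, patch_size_m):
    """Return (patches_per_side, pixels_per_patch) for a square tile.

    Hypothetical helper for illustration; not part of the AnySat API.
    Fractions avoid floating-point surprises with values such as 0.2 m.
    """
    resolution = Fraction(str(resolution_m))
    patch = Fraction(str(patch_size_m))
    pixels_per_patch = patch / resolution
    if pixels_per_patch.denominator != 1:
        raise ValueError("patch size is not a whole number of pixels")
    pixels_per_patch = int(pixels_per_patch)
    if side_px % pixels_per_patch != 0:
        raise ValueError("patches do not tile the image exactly")
    return side_px // pixels_per_patch, pixels_per_patch


# Sentinel-2 tile with 12 pixels per side on an assumed 10 m grid,
# cut into 20 m patches: 6 patches per side, 2 pixels per patch.
print(patch_grid(12, 10, 20))  # (6, 2)
```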

Bibtex

@article{astruc2024anysat,

title={{AnySat: An Earth} Observation Model for Any Resolutions, Scales, and Modalities},

author={Astruc, Guillaume and Gonthier, Nicolas and Mallet, Clement and Landrieu, Loic},

journal={arXiv preprint arXiv:2412.14123},

year={2024}

}

Acknowledgements

This work was granted access to the HPC resources of IDRIS under the allocations AD011014719 and AD011014286R1 made by GENCI. We thank Jordi Inglada, Antoine Labatie, Dimitris Samaras, Yohann Perron, and Vincent Lepetit for inspiring discussions and valuable feedback.